As the IPv6 migration moves on, more and more Internet providers connect their customers via IPv6 addresses. Because the provider then does the translation needed to reach the legacy IPv4 Internet, some VPN clients or VPN connections may be affected. I personally know a few people who can no longer connect remotely to their home base, which they reach via dynamic DNS. So if Internet access is now based on IPv6, what happens to companies that rely on their employees' Internet connectivity, e.g. for home office? In other words: a home office now has an IPv6 connection, and its users need a secure line to corporate data and vice versa. And of course: how does this scenario fit into a corporate IPv4 to IPv6 migration strategy?

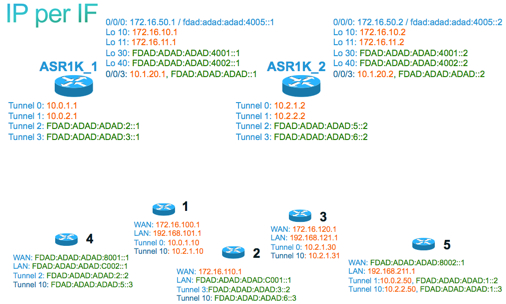

Anyhow, this inspired me to test a few scenarios with real hardware. My scope was simple: we have a dual-stack (IPv4 and IPv6) enabled HQ and branches which are connected to the Internet via IPv6 or IPv4. Secure communication needs to be established, and branches should be able to communicate with other branches. The transport protocol could be old-fashioned IPv4 or modern IPv6. The setup also has to fit into a migration scenario where all branches will be IPv6 enabled in the future, regardless of the underlying Internet connection, so a tunnel protocol has to be used as well. After turning the first ideas into a concept and a few drawings, I got my use cases:


| concept of a use case | final design scenario |

The idea is: some branches use IPv4, and branches that have finished their migration use IPv6 as the protocol of daily use. It can happen that an IPv6 branch still gets IPv4 from its Internet provider, or that an IPv4 branch must use IPv6 to connect to the Internet. The best case, IPv6 in the branch and IPv6 as transport protocol via the Internet, is not available everywhere right now. So finally I decided to go for 5 scenarios. Dual Stack enabled branches did not make sense at this time, because a host will use either IPv4 or IPv6, and the only difference would be two different tunnel types on one router (with failover that means four tunnels), which are already tested in use cases 1 to 5. So there is no real functionality test for this special case on the router. The connection and functionality of routers 1 and 3 are the same; I just needed to test the Dynamic Multipoint VPN (DMVPN) config and whether the routing protocol neighborship is also established via a single tunnel interface on the headend. So let's move on to a more technical approach:

After the design phase there is always the part where it comes to "hands-on". I used two Cisco ASR 1001 routers as headends, one Cisco Catalyst 3750-X switch to simulate the backbone, and a bunch of Cisco 800 series routers. Apart from the fact that structured cabling is not one of my personal strengths, the whole picture looked like this (and I forgot to mention a good old Cisco 2509 as a console server):

LAB at its final stage

But anyhow: it worked, because I always use a cabling plan and up-to-date documentation of which router port is connected to which VLAN on which switch port. So if your lab always looks like spaghetti and cabling is not your strength, you must become an expert in documentation :-).

Before I go into the detailed config I just want to share my key findings:

- On the headend routers (hub routers), use Loopback interfaces as the source for the tunnel interfaces. Otherwise you will reach the point where the IKE policies get mixed up and a tunnel interface goes down for no obvious reason when another tunnel comes online.

- Use cut&paste whenever possible: typos are hard to find, especially when you have to troubleshoot the crypto config.

- Plan your IP address design carefully. Every tunnel group needs its own subnet.

- Plan an additional routing subnet at the headend. The Loopback interfaces need to be reachable via the public network.

- Different tunnel interfaces with different address families are working great and the whole thing is very stable.

- Overall: one of many fantastic solutions if you have to migrate to IPv6.
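The address planning findings above ("every tunnel group needs its own subnet") can be checked before touching a router. The following is only a minimal sketch using Python's standard `ipaddress` module. The parent blocks `10.0.0.0/16` and `fdad:adad:adad::/48` and the tunnel group names are assumptions for illustration, not the address plan of this lab.

```python
import ipaddress


def plan_tunnel_subnets(block, new_prefix, tunnel_groups):
    """Hand out one subnet per tunnel group from a parent block (illustrative)."""
    subnets = ipaddress.ip_network(block).subnets(new_prefix=new_prefix)
    # zip() stops when the group list is exhausted, so only the
    # first len(tunnel_groups) subnets are taken from the generator.
    return dict(zip(tunnel_groups, subnets))


def has_overlap(plan):
    """Return True if any two planned subnets overlap."""
    nets = list(plan.values())
    return any(a.overlaps(b) for i, a in enumerate(nets) for b in nets[i + 1:])


# Assumed parent blocks and group names, chosen only for this example.
ipv4_plan = plan_tunnel_subnets(
    "10.0.0.0/16", 24, ["ipv4-over-ipv4", "ipv4-over-ipv6"])
ipv6_plan = plan_tunnel_subnets(
    "fdad:adad:adad::/48", 64, ["ipv6-over-ipv4", "ipv6-over-ipv6"])

for group, net in {**ipv4_plan, **ipv6_plan}.items():
    print(f"{group:16} {net}")
```

Keeping the plan in one place like this also makes it easy to regenerate the IP map per router and interface when a tunnel group is added.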

I will spare you the details, but I have to mention that in my lab the internal interfaces of a router are not reachable from the outside interfaces of other routers (the routes are simply not known). They have to connect via the tunnel interfaces of the DMVPN. The EIGRP config is also not shown in this post, but EIGRP is enabled on all the routers (IPv6 and IPv4). The simulated Internet routing is done via static routes, just to control the EIGRP routes without route policies etc.

For simplicity I decided to use the same crypto config for all the routers and tunnel interfaces. Here is the crypto config I used in my lab:

    crypto ikev2 proposal prop_ikev2
    crypto ikev2 profile pro_ikev2

I learned it the hard way: after a long IPsec troubleshooting session to find out why an SA was not established, I found a simple typo which prevented the traffic flow from the remote router to the headend :-). So I decided to always use cut&paste whenever possible for the remaining config work on the routers. Another thing to mention: I always used a different crypto ipsec profile for each tunnel interface. This gives me a little more flexibility than a shared one. At this point I also have to emphasize: use Loopback interfaces as tunnel endpoints on your hub routers, even if you have enough external facing interfaces left. If you use a dual-stack interface as the endpoint for different tunnels, the crypto engine will mess up. This is also mentioned on the Cisco website as best practice design, but it seems that I missed reading that one before doing my lab 🙂 …
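One way to take the "cut&paste instead of typing" idea a step further is to generate the per-tunnel profile lines from a single template, so a profile name is typed only once. This is just a sketch: the helper names `ipsec_profile` and `tunnel_protection` are made up for this example, and only command lines that already appear in the configs of this post are emitted.

```python
def ipsec_profile(profile, ikev2_profile, transform_set="dmvpn_set"):
    """Render one 'crypto ipsec profile' stanza (hypothetical helper)."""
    return "\n".join([
        f"crypto ipsec profile {profile}",
        f" set transform-set {transform_set}",
        f" set ikev2-profile {ikev2_profile}",
    ])


def tunnel_protection(profile):
    """Render the matching line for the tunnel interface (hypothetical helper)."""
    return f" tunnel protection ipsec profile {profile}"


# One profile per tunnel interface, as described above.
for name in ("IPSEC_profile", "IPSEC_profile10"):
    print(ipsec_profile(name, "pro_ikev2"))
    print(tunnel_protection(name))
```

Because the stanza and the `tunnel protection` line are rendered from the same variable, the two can no longer drift apart through a typo.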

To increase the readability of the configuration files I usually use a map which shows the IP-addresses per router per interface:

IP address per interface per router

So, here is the first tunnel interface config and the first corresponding router, which was plain IPv4. No magic at all:

Hub tunnel configuration, first ASR 1001:

    crypto ipsec profile IPSEC_profile
     set transform-set dmvpn_set
     set ikev2-profile pro_ikev2
    interface Loopback10
    interface Tunnel0

First tunnel configuration, spoke 1:

    crypto ipsec profile IPSEC_profile
    interface Tunnel0

And of course, to have redundancy when a hub router has e.g. a maintenance downtime, I used a second ASR 1001 as a second tunnel termination point for the spokes. So I created an additional tunnel interface on the spoke router. To guarantee failover I used EIGRP as the routing protocol. Once a tunnel interface on one headend router was not reachable, EIGRP did a great job failing over to the second available tunnel to the other headend router. Roughly one ping was lost during failover. And of course you can use both tunnels with "equal cost routing", which means both routes are installed in the routing table with the same cost, so a packet can go either way.

Hub tunnel configuration, second ASR 1001:

    crypto ipsec profile IPSEC_profile
    interface Loopback10
    interface Tunnel0

Second tunnel configuration, spoke 1:

    crypto ipsec profile IPSEC_profile10
    interface Tunnel10

After successfully establishing the first tunnels we have to bring in a little more complexity to raise the fun level :-). The first IPv6 use case: IPv6 is the only way to connect to the Internet for some branches, while the protocol used inside these remote locations is still IPv4. This means IPv4 has to be tunneled via an IPv6 DMVPN, and all the IPv4 branches, some of them still connected via IPv4, should be able to communicate with each other. So basically a new tunnel interface must be created, and it must be reachable via IPv6. Inside the encrypted tunnel IPv4 is used, and the routing neighborship to the headend must be established over it as well.

Hub tunnel configuration, first ASR 1001:

    crypto ipsec profile IPSEC_profilev6_1
     set transform-set dmvpn_set
     set ikev2-profile pro_ikev2v6
    interface Loopback30
     no ip address
     ipv6 address FDAD:ADAD:ADAD:4001::1/64
    interface Tunnel1
     ip address 10.0.2.1 255.255.255.0
     no ip redirects
     ip mtu 1400
     no ip next-hop-self eigrp 100
     no ip split-horizon eigrp 100
     ip nhrp authentication ciscov6
     ip nhrp map multicast dynamic
     ip nhrp network-id 101
     ip nhrp holdtime 600
     ip nhrp shortcut
     ip nhrp redirect
     delay 1000
     ipv6 address FDAD:ADAD:ADAD:1::1/64
     no ipv6 next-hop-self eigrp 200
     no ipv6 split-horizon eigrp 200
     tunnel source Loopback30
     tunnel mode gre multipoint ipv6
     tunnel key 9999
     tunnel path-mtu-discovery
     tunnel protection ipsec profile IPSEC_profilev6_1

First tunnel configuration, spoke 5:

    crypto ipsec profile IPSEC_profilev6
    interface Tunnel1
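The `ip mtu 1400` on the tunnel interface leaves room for the GRE and IPsec encapsulation. As a rough plausibility check, the packet size on the wire can be estimated like this. Please treat it as a sketch under assumptions: the contents of the transform set `dmvpn_set` are not shown in this post, so ESP in transport mode with AES-CBC and HMAC-SHA1-96 is assumed here purely for illustration. The GRE header is counted with 8 bytes because a tunnel key is configured.

```python
def encrypted_packet_size(inner_len, outer_header_len,
                          gre_len=8, iv_len=16, block_len=16, icv_len=12):
    """Estimate the on-the-wire size of one GRE-over-IPsec packet.

    Assumptions (NOT taken from the lab config): ESP transport mode,
    AES-CBC (16-byte IV, 16-byte blocks), HMAC-SHA1-96 (12-byte ICV).
    gre_len=8 is the 4-byte GRE base header plus the 4-byte key field.
    """
    esp_header_len = 8   # SPI + sequence number
    esp_trailer_len = 2  # pad length + next header
    plaintext = gre_len + inner_len + esp_trailer_len
    # Round up to the next multiple of the cipher block size.
    padded = -(-plaintext // block_len) * block_len
    return outer_header_len + esp_header_len + iv_len + padded + icv_len


IPV4_HEADER = 20  # outer header without options
IPV6_HEADER = 40  # fixed outer header

size_over_ipv6 = encrypted_packet_size(1400, IPV6_HEADER)
size_over_ipv4 = encrypted_packet_size(1400, IPV4_HEADER)
print(size_over_ipv6, size_over_ipv4)
```

Under these assumptions a 1400-byte inner packet becomes 1500 bytes over an IPv6 transport and 1480 bytes over an IPv4 transport, so it still fits into a standard 1500-byte Ethernet MTU. Other ciphers or tunnel mode change the numbers.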

… and the second tunnel for redundancy purpose:

Hub tunnel configuration, second ASR 1001:

    crypto ipsec profile IPSEC_profilev6_1
    interface Loopback30
    interface Tunnel1

Second tunnel configuration, spoke 5:

    crypto ipsec profile IPSEC_profilev610
    interface Tunnel10

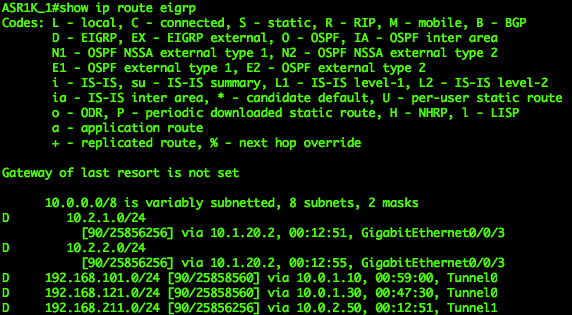

And the IPv4 EIGRP neighborship looked great. All routes via the tunnel interfaces were up and the neighborship was established to both hub routers. So scenarios 3, 5 and 1 were up and running. Just to mention: the dynamic part of the DMVPN only works inside a single tunnel interface. In these combined scenarios we have tunnel interfaces with different characteristics. This means communication between branches terminating on different tunnel interfaces must be routed via the headend (you can't build a DMVPN tunnel from an IPv4 to an IPv6 address).
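The rule from the paragraph above can be written down as a tiny decision function. This is only an illustration of the rule, not something that runs on the routers. The mapping of spokes to tunnel interfaces is an assumption for this example (in particular that spoke 3 shares Tunnel0 with spoke 1), and the function name `branch_path` is made up.

```python
# Assumed mapping of spokes to the hub tunnel interface they register on.
SPOKE_TUNNEL = {
    "spoke1": "Tunnel0",  # IPv4 branch, IPv4 transport
    "spoke3": "Tunnel0",  # assumption: same tunnel as spoke 1
    "spoke5": "Tunnel1",  # IPv4 branch, IPv6 transport
}


def branch_path(src, dst):
    """Return the expected path between two branches (illustrative only).

    Spokes on the same tunnel interface can build a direct DMVPN
    shortcut; spokes on different tunnel interfaces go via the headend.
    """
    if SPOKE_TUNNEL[src] == SPOKE_TUNNEL[dst]:
        return [src, dst]
    return [src, "hub", dst]


print(branch_path("spoke1", "spoke3"))
print(branch_path("spoke1", "spoke5"))
```

For capacity planning this means the headend carries all traffic between branches that sit on different transport address families.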


| EIGRP IPv4 routes on Hub 1 | EIGRP IPv4 routes on Hub 2 |

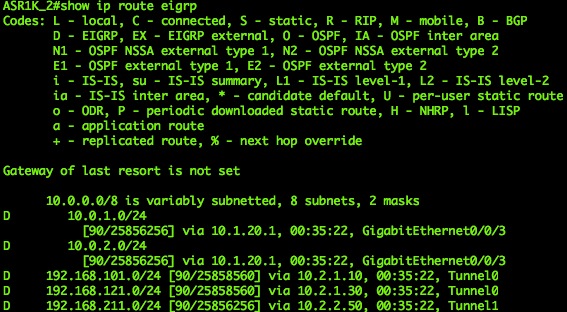

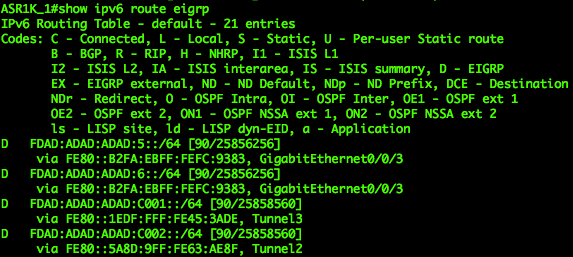

Next: I used IPv4 as the transport protocol over the simulated Internet, while the branch was already migrated to IPv6 for its communication with the HQ. This can easily be the case when your existing Internet connection is still based on IPv4 but the branch should be migrated to IPv6 because it fits into your timetable. The migration (dual stack) in the HQ is already in place, and communication can be established to the important systems (case 2).

Hub tunnel configuration, first ASR 1001:

    crypto ipsec profile IPSEC_profile_3
    interface Loopback11
    interface Tunnel3

First tunnel configuration, spoke 2:

    crypto ipsec profile IPSEC_profile
    interface Tunnel3

Hub tunnel configuration, second ASR 1001:

    crypto ipsec profile IPSEC_profilev6_2
     set transform-set dmvpn_set
     set ikev2-profile pro_ikev2v6
    interface Loopback40
     no ip address
     ipv6 address FDAD:ADAD:ADAD:4002::2/64
    interface Tunnel2
     no ip address
     no ip redirects
     no ip next-hop-self eigrp 100
     no ip split-horizon eigrp 100
     delay 1000
     ipv6 address FE80:2001:: link-local
     ipv6 address FDAD:ADAD:ADAD:5::2/64
     ipv6 mtu 1400
     ipv6 nhrp authentication ciscov6
     ipv6 nhrp map multicast dynamic
     ipv6 nhrp network-id 202
     ipv6 nhrp holdtime 600
     ipv6 nhrp shortcut
     ipv6 nhrp redirect
     ipv6 eigrp 200
     no ipv6 next-hop-self eigrp 200
     no ipv6 split-horizon eigrp 200
     tunnel source Loopback40
     tunnel mode gre multipoint ipv6
     tunnel key 44444
     tunnel path-mtu-discovery
     tunnel protection ipsec profile IPSEC_profilev6_2

Second tunnel configuration, spoke 2:

    crypto ipsec profile IPSEC_profilev710
     set transform-set dmvpn_set
     set ikev2-profile pro_ikev2v7
    interface Tunnel10
     no ip address
     no ip redirects
     delay 1000
     ipv6 address FDAD:ADAD:ADAD:5::3/64
     ipv6 mtu 1400
     ipv6 eigrp 200
     ipv6 nhrp authentication ciscov6
     ipv6 nhrp map multicast FDAD:ADAD:ADAD:5::2
     ipv6 nhrp network-id 202
     ipv6 nhrp holdtime 600
     ipv6 nhrp nhs FDAD:ADAD:ADAD:5::2 nbma FDAD:ADAD:ADAD:4002::2 multicast
     ipv6 nhrp shortcut
     ipv6 nhrp redirect
     tunnel source GigabitEthernet0
     tunnel mode gre multipoint ipv6
     tunnel key 44444
     tunnel protection ipsec profile IPSEC_profilev710


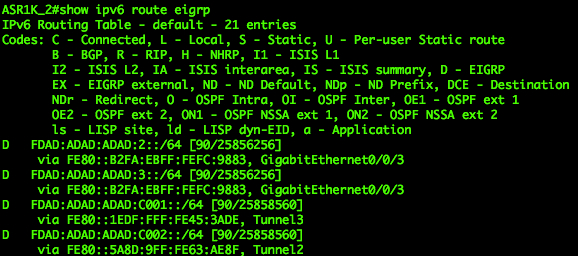

| EIGRP IPv6 routes on Hub 1 | EIGRP IPv6 routes on Hub 2 |


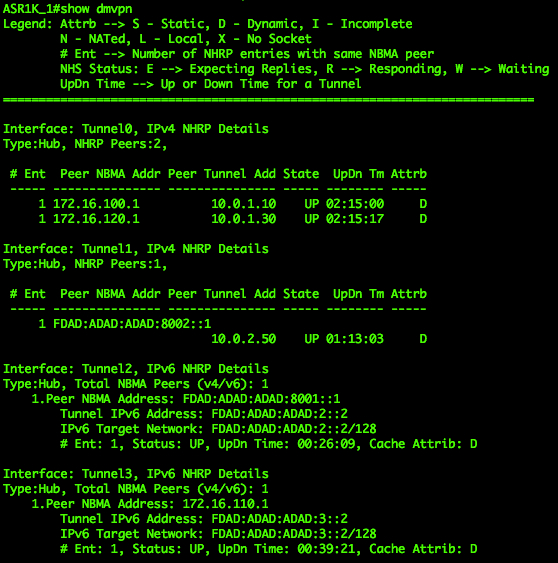

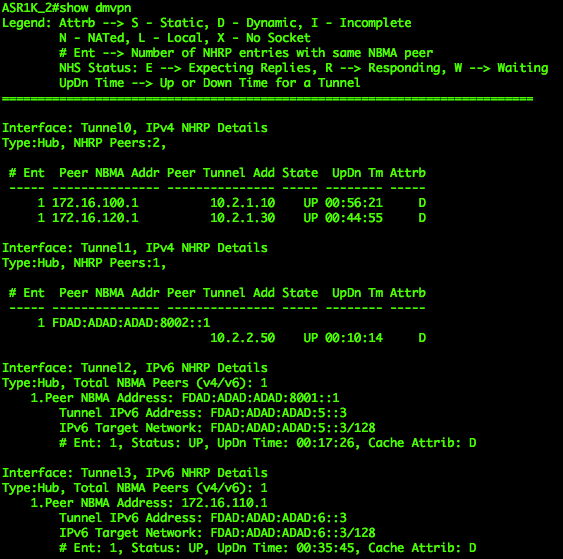

| DMVPN on Hub 1 | DMVPN on Hub 2 |